Apache Hadoop YARN

Introduction

YARN stands for “Yet Another Resource Negotiator”, but it’s commonly referred to by the acronym alone; the full name was self-deprecating humor on the part of its developers. You can think of YARN as the brain of your Hadoop ecosystem. Its fundamental idea is to split the functionalities of resource management and job scheduling/monitoring into separate daemons.

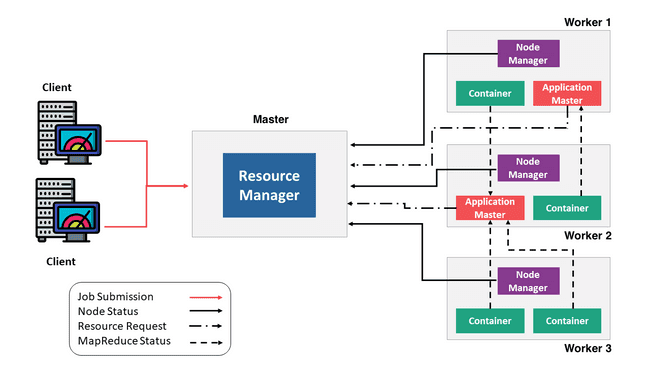

Architecture

Conceptually, a master node is the communication point for a client program. A master sends the work to the rest of the cluster, which consists of worker nodes.

In YARN, the ResourceManager on a master node and the NodeManagers on worker nodes form the data-computation framework. The ResourceManager is the ultimate authority that arbitrates resources among all the applications in the system. An application is either a single job or a DAG of jobs. The NodeManager is the per-machine framework agent that is responsible for containers, monitoring their resource usage (CPU, memory, disk, network) and reporting it to the ResourceManager/Scheduler.

The image below represents the YARN architecture.

The main components of YARN architecture include:

- Client:

It submits applications, such as MapReduce jobs, to the ResourceManager.

- ResourceManager:

It is the master daemon of YARN and is responsible for resource assignment and management among all the applications. Whenever it receives a processing request, it forwards it to the corresponding NodeManager and allocates resources for the completion of the request accordingly. It has two major components:

- Scheduler:

It performs scheduling based on the resource requirements of the applications and the available resources. It is a pure scheduler, meaning that it does not perform other tasks such as monitoring or tracking application status. It also offers no guarantees about restarting tasks that fail because of application or hardware failures.

The Scheduler has a pluggable policy which is responsible for partitioning the cluster resources among the various queues, applications etc. The current schedulers such as the CapacityScheduler and the FairScheduler would be some examples of plugins.

- ApplicationsManager:

It is responsible for accepting application submissions and negotiating the first container, in which the application-specific ApplicationMaster runs. It also restarts the ApplicationMaster container if the ApplicationMaster fails.

- NodeManager:

It takes care of an individual node in the Hadoop cluster and manages the applications and workflow on that particular node. Its primary job is to keep up to date with the ResourceManager. It monitors resource usage, performs log management and kills containers based on directions from the ResourceManager. It is also responsible for creating the container process and starting it at the request of the ApplicationMaster.

- ApplicationMaster:

The per-application ApplicationMaster has the responsibility of negotiating appropriate resource containers from the Scheduler, tracking their status and monitoring for progress.

- Container:

It is a collection of physical resources such as RAM, CPU cores and disk on a single node. Containers are launched using a Container Launch Context (CLC), a record that contains information such as environment variables, security tokens, dependencies etc.

YARN supports the notion of resource reservation via the ReservationSystem, a component that allows users to specify a profile of resources over time and temporal constraints (e.g., deadlines), and to reserve resources to ensure the predictable execution of important jobs. The ReservationSystem tracks resources over time, performs admission control for reservations, and dynamically instructs the underlying scheduler to ensure that the reservation is fulfilled.

To scale YARN beyond a few thousand nodes, YARN supports the notion of Federation via the YARN Federation feature. Federation transparently wires together multiple YARN (sub-)clusters and makes them appear as a single massive cluster. This can be used to achieve larger scale, and/or to allow multiple independent clusters to be used together for very large jobs, or for tenants who have capacity across all of them.

How does YARN work?

Application execution consists of the following steps:

- Application submission

- Bootstrapping the ApplicationMaster instance for the application

- Application execution managed by the ApplicationMaster instance

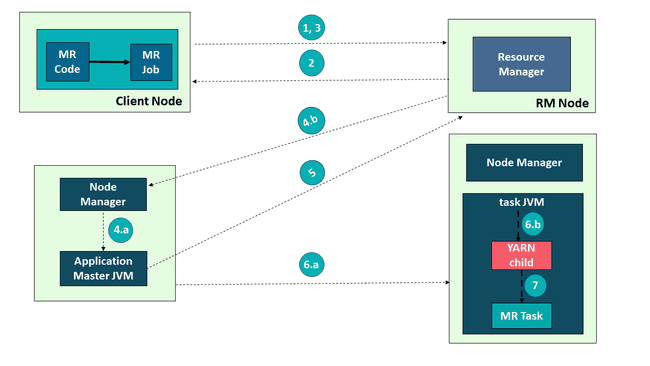

Application submission in YARN

A YARN job or application can be submitted to the cluster with the yarn jar command and its options. The image below shows the steps involved in submitting an application to Hadoop YARN:

- Submit the job

- Get Application ID

- Application submission context

  a) Start Container Launch

  b) Launch Application Master

- Allocate Resources

  a) Container

  b) Launch

- Execute

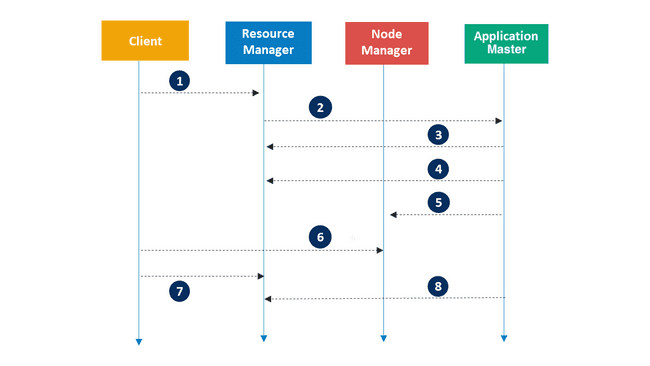

Application execution in YARN

Let’s walk through an application execution sequence (steps are illustrated in the diagram):

- A client program submits the application, including the necessary specifications to launch the application-specific ApplicationMaster itself.

- The ResourceManager assumes the responsibility to negotiate a specified container in which to start the ApplicationMaster and then launches the ApplicationMaster.

- The ApplicationMaster, on boot-up, registers with the ResourceManager – the registration allows the client program to query the ResourceManager for details, which allow it to directly communicate with its own ApplicationMaster.

- During normal operation the ApplicationMaster negotiates appropriate resource containers via the resource-request protocol.

- On successful container allocations, the ApplicationMaster launches the container by providing the container launch specification to the NodeManager. The launch specification, typically, includes the necessary information to allow the container to communicate with the ApplicationMaster itself.

- The application code executing within the container then provides necessary information (progress, status etc.) to its ApplicationMaster via an application-specific protocol.

- During the application execution, the client that submitted the program communicates directly with the ApplicationMaster to get status, progress updates etc. via an application-specific protocol.

- Once the application is complete, and all necessary work has been finished, the ApplicationMaster deregisters with the ResourceManager and shuts down, allowing its own container to be repurposed.
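The sequence above can be observed from the command line while an application moves through it. The following is a minimal sketch, assuming a configured Hadoop client with the `yarn` CLI on the `PATH` and, on a secured cluster, a valid Kerberos ticket. The `APP_ID` value is a placeholder and the `show_lifecycle` function name is illustrative.

```shell
#!/bin/sh
# Placeholder application ID: replace it with the ID printed at submission time.
APP_ID="application_1587994786721_0137"

show_lifecycle() {
  # Applications that are waiting for, or already running in, containers.
  yarn application -list -appStates ACCEPTED,RUNNING
  # State, final status, progress and tracking URL of a single application.
  yarn application -status "$1"
}

if command -v yarn >/dev/null 2>&1; then
  show_lifecycle "$APP_ID"
else
  echo "yarn CLI not found; run this on a configured Hadoop client" >&2
fi
```

An application in the ACCEPTED state is typically still waiting for the container of its ApplicationMaster, while RUNNING means the ApplicationMaster has registered with the ResourceManager.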

Example of a YARN job submission

To demonstrate how to submit a YARN job and extract information about its running parameters through the YARN UI, we will use the TeraSort benchmark as an example application. It is one of the benchmarking tools included in the Apache Hadoop distribution and is therefore available in the Adaltas Cloud cluster.

Hadoop TeraSort is a well-known benchmark that aims to sort a large volume of data as fast as possible using Hadoop MapReduce. The TeraSort benchmark stresses almost every part of the Hadoop MapReduce framework as well as the HDFS filesystem, making it an ideal choice for fine-tuning the configuration of a Hadoop cluster. The benchmark consists of 3 components that run sequentially:

- TeraGen - generates random data

- TeraSort - does the sorting using MapReduce

- TeraValidate - used to validate the output
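TeraGen is sized by a record count rather than a byte size. Each generated record is 100 bytes long, so the count for a target dataset size follows from simple arithmetic. A minimal sketch, where `TARGET_BYTES` and the other variable names are illustrative and not part of the benchmark:

```shell
#!/bin/sh
# TeraGen writes fixed-size records of 100 bytes each.
RECORD_BYTES=100
# Illustrative target size: 1 GB expressed as 10^9 bytes.
TARGET_BYTES=1000000000
# Number of records to pass to teragen as its first parameter.
RECORDS=$((TARGET_BYTES / RECORD_BYTES))
echo "teragen record count: $RECORDS"
```

For a 1 GB target this yields 10000000 records.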

The following steps will show you how to run the TeraSort benchmark on the Hadoop Adaltas Cloud cluster:

[[info | Clean up]] | After performing all these steps, don’t forget to delete input and output directories for TeraSort, so that the next benchmark can be run without any storage issue.

- TeraGen

The first step of the TeraSort benchmark is data generation. You can use the teragen command to generate the input data for the TeraSort benchmark. The first parameter of teragen is the number of records and the second parameter is the HDFS directory in which to generate the data. The following command generates 1 GB of data consisting of 10 million records in the tera-in directory in HDFS:

yarn jar \
/usr/hdp/3.1.0.0-78/hadoop-mapreduce/hadoop-mapreduce-examples-*.jar \
teragen 10000000 tera-in

Once you’ve submitted a YARN application, you can monitor its execution via the YARN ResourceManager UI described in the next section. The command also prints log information to the console: after establishing a connection and passing Kerberos authentication, you can see the job submission log and the execution progress as the percentage of Map and Reduce tasks completed. After the job finishes, it prints a summary of the executed process and the resources used:

...

20/06/17 18:11:14 INFO impl.YarnClientImpl: Submitted application application_1587994786721_0137

20/06/17 18:11:14 INFO mapreduce.Job: The url to track the job: http://yarn-rm-1.au.adaltas.cloud:8088/proxy/application_1587994786721_0137/

20/06/17 18:11:14 INFO mapreduce.Job: Running job: job_1587994786721_0137

20/06/17 18:11:21 INFO mapreduce.Job: Job job_1587994786721_0137 running in uber mode : false

20/06/17 18:11:21 INFO mapreduce.Job: map 0% reduce 0%

20/06/17 18:11:30 INFO mapreduce.Job: map 50% reduce 0%

20/06/17 18:11:32 INFO mapreduce.Job: map 100% reduce 0%

20/06/17 18:11:32 INFO mapreduce.Job: Job job_1587994786721_0137 completed successfully

20/06/17 18:11:32 INFO mapreduce.Job: Counters: 33

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=483096

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

...

Job Counters

Launched map tasks=2

Other local map tasks=2

Total time spent by all maps in occupied slots (ms)=81080

...

Map-Reduce Framework

Map input records=10000000

Map output records=10000000

Input split bytes=167

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=236

CPU time spent (ms)=20050

...

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=1000000000

- TeraSort

The second step of the TeraSort benchmark is the execution of the TeraSort MapReduce computation on the data generated in step 1, using the following command. TeraSort reads the input data and uses MapReduce to sort it. The first parameter of the terasort command is the HDFS input directory, and the second parameter is the HDFS output directory.

yarn jar \
/usr/hdp/3.1.0.0-78/hadoop-mapreduce/hadoop-mapreduce-examples-*.jar \
terasort tera-in tera-out

- TeraValidate

The last step of the TeraSort benchmark is the validation of the results. TeraValidate validates the sorted output to ensure that the keys are sorted within each file. If anything is wrong with the sorted output, the output of this reducer reports the problem. This can be done using the teravalidate application as follows. The first parameter is the directory with the sorted data and the second parameter is the directory to store the report containing the results.

yarn jar \
/usr/hdp/3.1.0.0-78/hadoop-mapreduce/hadoop-mapreduce-examples-*.jar \
teravalidate tera-out tera-validate

As a result of these 3 steps, we submitted 3 YARN applications sequentially.
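The clean-up recommended earlier can be done with the HDFS shell. The following is a sketch under the assumption that the three directories were created with the relative names used in the commands above, which places them in the submitting user's HDFS home directory. The `cleanup_terasort` function name is illustrative.

```shell
#!/bin/sh
# Directories created by TeraGen, TeraSort and TeraValidate in the steps above.
BENCH_DIRS="tera-in tera-out tera-validate"

cleanup_terasort() {
  # -r removes directories recursively; -skipTrash frees the space immediately
  # instead of moving the data to the HDFS trash.
  # $BENCH_DIRS is intentionally unquoted so that each name is a separate argument.
  hdfs dfs -rm -r -skipTrash $BENCH_DIRS
}

if command -v hdfs >/dev/null 2>&1; then
  cleanup_terasort
else
  echo "hdfs CLI not found; nothing was removed" >&2
fi
```

Using `-skipTrash` makes the deletion permanent; drop the flag if you prefer to keep the data recoverable from the trash for a while.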

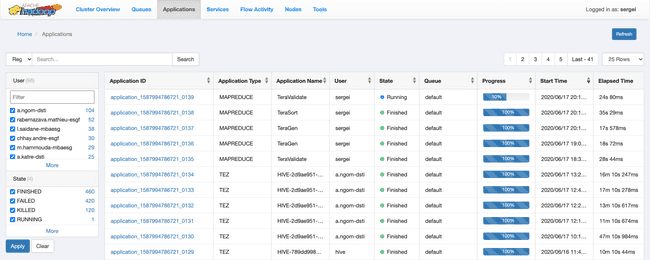

Monitoring with YARN ResourceManager UI

You can monitor the application submission ID, the user who submitted the application, the name of the application, the queue in which the application is submitted, the start time and finish time in the case of finished applications, and the final status of the application, using the ResourceManager UI.

To access the ResourceManager web UI in Adaltas Cloud, use this link: http://yarn-rm-1.au.adaltas.cloud:8088/ui2.

[[info | Notice]] | The ResourceManager UI is Kerberos protected, and you need to pass the Kerberos authentication to get in.

- Monitoring applications

You can search for applications on the Applications page.

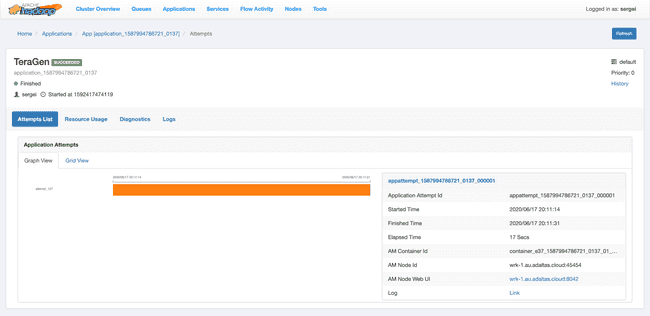

In the preceding example we submitted the TeraGen application with the ID application_1587994786721_0137. To view its details, follow its link.
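When submissions are scripted, the application ID can be taken from the console log instead of being copied by hand. A small sketch using the submission line from the TeraGen log shown earlier; the variable names are illustrative:

```shell
#!/bin/sh
# One line of the console log printed when TeraGen was submitted.
LOG_LINE='20/06/17 18:11:14 INFO impl.YarnClientImpl: Submitted application application_1587994786721_0137'

# Keep only the token that follows "Submitted application ".
APP_ID=$(printf '%s\n' "$LOG_LINE" |
  sed -n 's/.*Submitted application \(application_[0-9]*_[0-9]*\).*/\1/p')

# The MapReduce job ID in the same log shares the numeric parts of the application ID.
JOB_ID=$(printf '%s\n' "$APP_ID" | sed 's/^application_/job_/')

echo "$APP_ID"
echo "$JOB_ID"
```

This prints `application_1587994786721_0137` followed by `job_1587994786721_0137`, the two identifiers that appear in the log.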

In the Application List tab we can see all the attempts that were performed while executing this application:

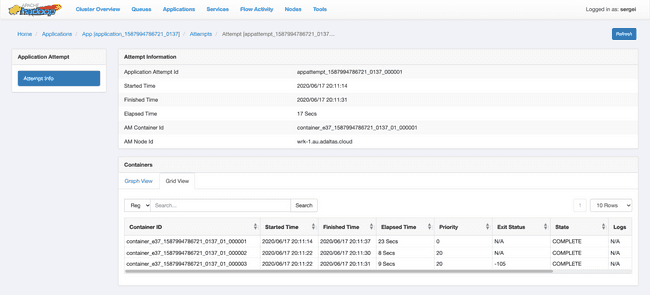

Detailed information about each attempt is available on the Attempt Info page, where you can explore how many containers were launched and on which nodes:

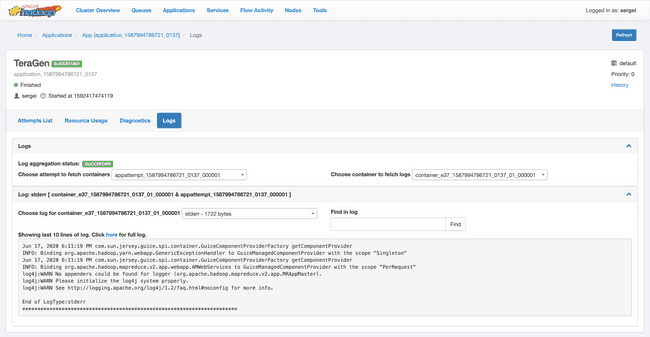
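The same attempt and container information is available from the `yarn` CLI. The sketch below assumes a configured client and derives the ID of the first attempt from the application ID, since attempt IDs reuse the application's numeric parts followed by a six-digit attempt counter. For finished applications, the container list may be empty unless the cluster keeps application history.

```shell
#!/bin/sh
APP_ID="application_1587994786721_0137"
# First attempt of the application: appattempt_<cluster timestamp>_<sequence>_000001.
ATTEMPT_ID="$(printf '%s\n' "$APP_ID" | sed 's/^application_/appattempt_/')_000001"

if command -v yarn >/dev/null 2>&1; then
  # All attempts made for the application.
  yarn applicationattempt -list "$APP_ID"
  # Containers that belong to one attempt, with the nodes they ran on.
  yarn container -list "$ATTEMPT_ID"
else
  echo "yarn CLI not found; would inspect $ATTEMPT_ID" >&2
fi
```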

Log information can be extracted in the Logs tab. Using the available filter fields, you can select the attempt, the container and the type of log you want to extract:

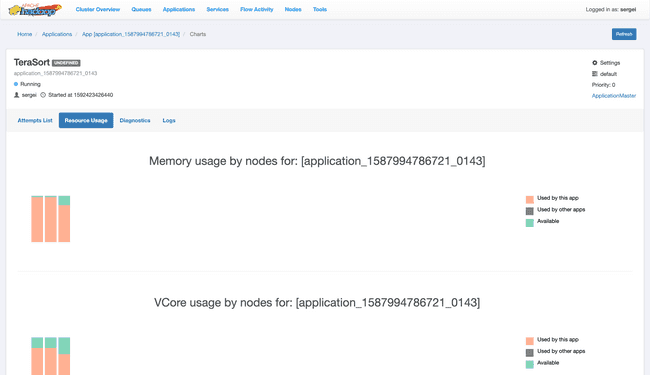

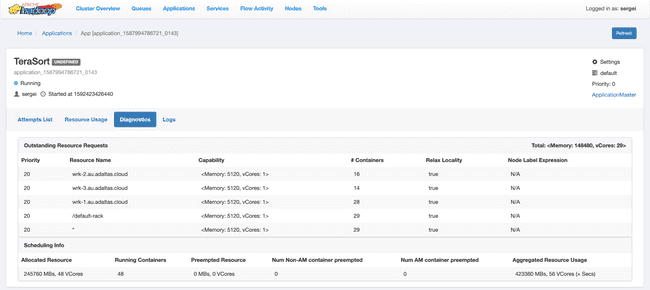
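Logs can also be retrieved without the UI. A minimal sketch, assuming that log aggregation is enabled on the cluster and that the application has finished; the output file name is an arbitrary choice:

```shell
#!/bin/sh
APP_ID="application_1587994786721_0137"
# Local file that receives the aggregated logs (illustrative name).
OUT_FILE="$APP_ID.log"

if command -v yarn >/dev/null 2>&1; then
  # Logs of every container of the application, saved to a local file.
  # To narrow the output to one container, add: -containerId <container ID>
  yarn logs -applicationId "$APP_ID" > "$OUT_FILE"
else
  echo "yarn CLI not found; would write $OUT_FILE" >&2
fi
```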

The Resource Usage and Diagnostic tabs also show information during application execution. Here are some example screenshots:

- Monitoring cluster

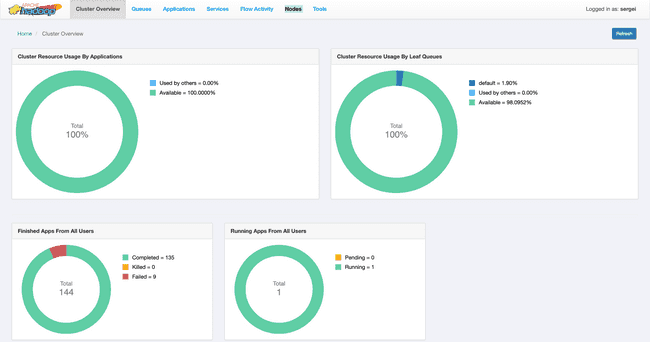

The Cluster Overview page shows cluster resource usage by applications and queues, information about finished and running applications, and usage of memory and vCores in the cluster.
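The figures shown on this page are also exposed by the ResourceManager REST API under `/ws/v1/cluster/metrics`. The sketch below only defines a helper and prints the endpoint. It assumes that `curl` is built with SPNEGO support and that a Kerberos ticket is available, since the UI is Kerberos protected; the `cluster_metrics` function name is illustrative.

```shell
#!/bin/sh
# ResourceManager address used in this article.
RM_URL="http://yarn-rm-1.au.adaltas.cloud:8088"
METRICS_URL="$RM_URL/ws/v1/cluster/metrics"

cluster_metrics() {
  # --negotiate with an empty user (-u :) authenticates with the current Kerberos ticket.
  curl --silent --negotiate -u : "$METRICS_URL"
}

echo "Cluster metrics endpoint: $METRICS_URL"
# Usage, after obtaining a ticket with kinit:
#   cluster_metrics
```

The response is a JSON document with counters such as the number of running applications and the allocated and available memory and vCores.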

- Monitoring nodes

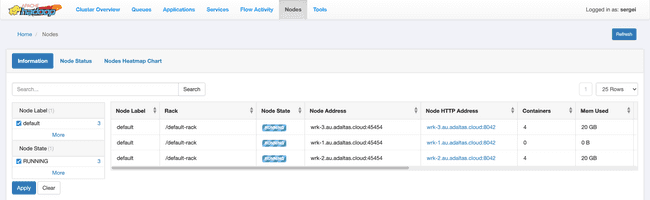

The Nodes page on the YARN Web User Interface enables you to view information about the cluster nodes on which the NodeManagers are running.

- Monitoring queues

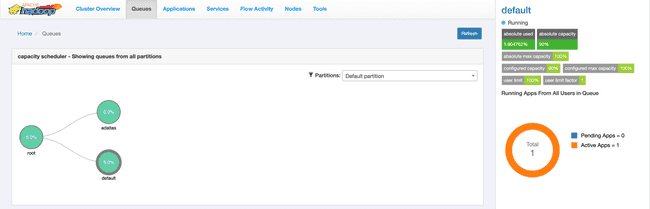

The Queues page displays details of YARN queues. You can either view queues from all the partitions or filter to view queues of a partition.

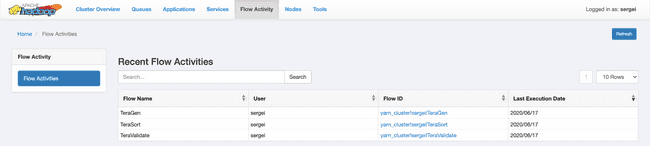
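Queue details can be checked from the command line as well. This sketch assumes a queue named `default`, which may not exist on your cluster; replace it with one of the queues listed on the Queues page.

```shell
#!/bin/sh
# Assumed queue name; adjust it to a queue defined by your scheduler configuration.
QUEUE_NAME="default"

if command -v yarn >/dev/null 2>&1; then
  # State, configured capacity and current capacity of the queue.
  yarn queue -status "$QUEUE_NAME"
else
  echo "yarn CLI not found; would query queue $QUEUE_NAME" >&2
fi
```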

- Monitoring flow activity

You can view information about application flows from the Flow Activities page.

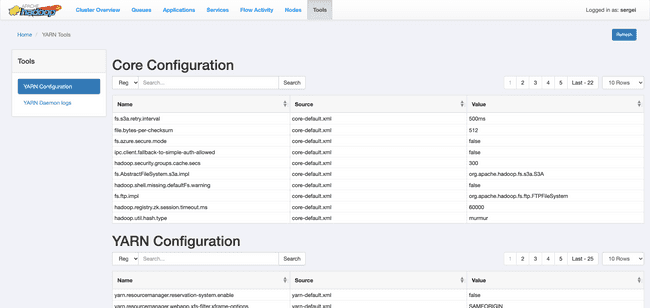

- Tools

You can view the YARN configuration and YARN Daemon logs on the Tools page.