Spark Introduction

Spark Definition

Spark is an open source framework for distributed processing. Applications can be written in Java, Python, or Scala and then packaged and deployed, or run interactively from a Scala or Python command-line prompt for testing and exploration. Spark is designed to split a job into tasks that run on several servers at the same time, turning one long task on a large dataset into smaller tasks, each working on a portion of the data.
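To make the idea of splitting one long task into smaller ones concrete, here is a minimal sketch in plain Python. It does not use Spark at all: the function names (`split_into_partitions`, `sum_of_squares`, `run_job`) are made up for this illustration, and local threads merely stand in for executors running on separate servers.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(data, num_partitions):
    """Cut data into num_partitions contiguous chunks of near-equal size."""
    size, extra = divmod(len(data), num_partitions)
    partitions, start = [], 0
    for i in range(num_partitions):
        # The first `extra` partitions take one additional element.
        end = start + size + (1 if i < extra else 0)
        partitions.append(data[start:end])
        start = end
    return partitions


def sum_of_squares(partition):
    # One "task": it only sees its own portion of the data.
    return sum(x * x for x in partition)


def run_job(data, num_partitions=4):
    partitions = split_into_partitions(data, num_partitions)
    # Threads play the role of executors here (an assumption of this sketch).
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partial_results = list(pool.map(sum_of_squares, partitions))
    # Combine the partial results into the final answer.
    return sum(partial_results)
```

Calling `run_job(list(range(10)), 3)` computes the same sum of squares as a single loop over the whole list, but each partition is processed as an independent task. Spark applies the same principle across machines and adds scheduling, fault tolerance, and data movement on top.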

Spark on Yarn

In our context, Spark runs on Yarn, a resource manager. Yarn manages computing (CPU) and memory (RAM) resources, allocating the right amount of each to every job running on the cluster. You can define queues to make sure that a pool of jobs gets a minimum amount of resources or does not go above a threshold.

Run a Spark Job

Run a job from command line

First you need to move the file from your local computer to the cluster environment.

scp spark-default-1.0.jar <user>@edge-1.au.adaltas.cloud:/home/<user>/

Spark command line to run a job

spark-submit --class org.apache.spark.examples.SparkPi \
--master yarn \
--deploy-mode cluster \
--driver-memory 4g \
--executor-memory 2g \
--executor-cores 1 \
--queue thequeue \

- --master: Defines where the job runs. It can be local or a given Spark master URL. In our case, Spark runs on Yarn.

- --class: The fully qualified name of the class you want to run. Here, the class is SparkPi in the package org.apache.spark.examples.

- --deploy-mode: Whether to deploy your driver on the worker nodes (cluster) or locally as an external client (client). To see logs and debug directly, use client. You can also see logs when running in cluster mode, but you will have to go to the SparkUI.

The other arguments tune the resources you want to use. Note that they do not override the limits set for your user or your queue.
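If you launch jobs from a script rather than by hand, the options described above can be assembled programmatically. The following is only a sketch: `build_spark_submit` is a hypothetical helper, and the JAR path `/path/to/your-app.jar` is a placeholder, not a real location on the cluster. The command is built as a list but never executed here.

```python
import shlex


def build_spark_submit(app_jar, main_class, queue,
                       deploy_mode="cluster",
                       driver_memory="4g",
                       executor_memory="2g",
                       executor_cores=1):
    """Return the spark-submit command as a list of arguments."""
    if deploy_mode not in ("cluster", "client"):
        raise ValueError("deploy_mode must be 'cluster' or 'client'")
    return [
        "spark-submit",
        "--class", main_class,
        "--master", "yarn",
        "--deploy-mode", deploy_mode,
        "--driver-memory", driver_memory,
        "--executor-memory", executor_memory,
        "--executor-cores", str(executor_cores),
        "--queue", queue,
        app_jar,  # the application JAR comes after all the options
    ]


cmd = build_spark_submit(
    app_jar="/path/to/your-app.jar",  # placeholder path (assumption)
    main_class="org.apache.spark.examples.SparkPi",
    queue="thequeue",
)
# Print the command for review; pass `cmd` to subprocess.run to execute it.
print(shlex.join(cmd))
```

Building the command as a list rather than a single string avoids shell quoting problems when it is later passed to `subprocess.run`.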

When your job is running, you can use the command line to see the status.

yarn application --list

This lets you see the running jobs and their associated application IDs. An ID can be used to perform other operations on the job, such as stopping it.

yarn application --kill <application_id>

Now, if you want to experiment with Spark, you can use the Spark Shell.

Spark Shell

From an SSH session on the cluster:

spark-shell

SparkUI

SparkUI is a web interface that lists all running jobs and shows information such as the resources used, the number of tasks completed, and the number of executors. You can access it here.

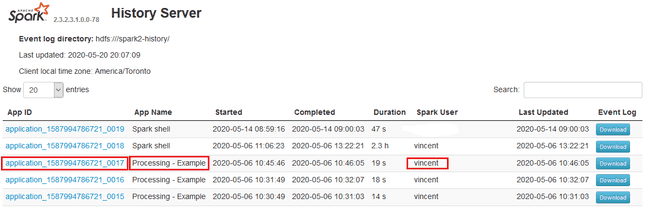

On this page you can see the application ID, the app name and the user for each job.

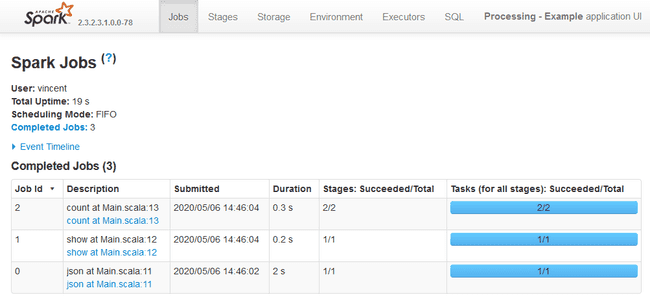

By clicking the application ID you want to monitor, you get to a detailed page with information such as the time per execution, how the job was split, and the order in which it was executed.

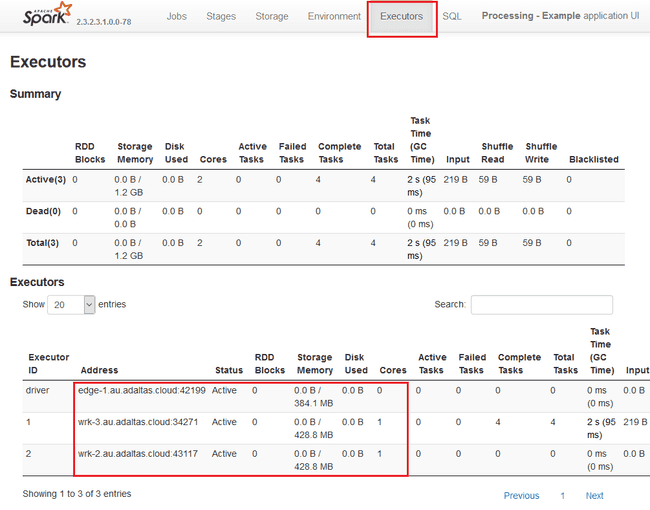

If you look at the Executors tab at the top, you will find information on where your job ran and how many resources were used.

Spark Logs

As mentioned previously, if you want to access job logs, you have to go through the Yarn resource manager.

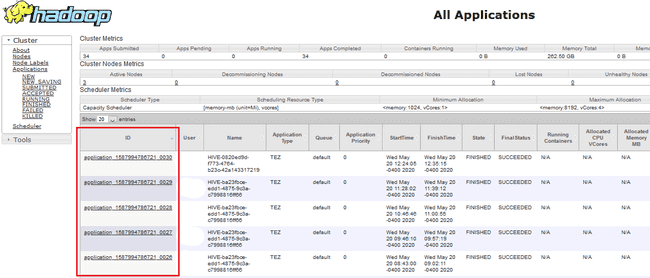

You will find a list of jobs here as well, but it includes all jobs that have run or are running on Yarn, not just Spark jobs.

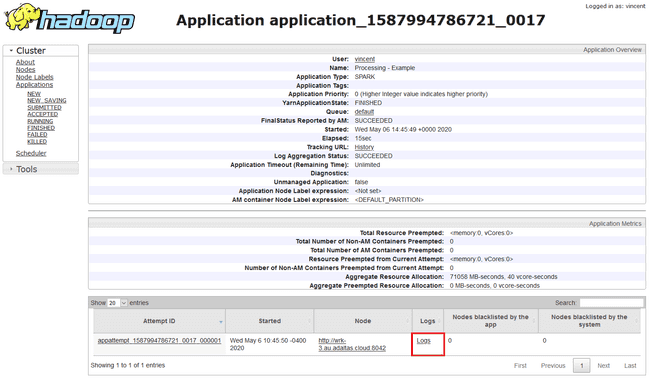

By clicking an ID, you get to a similar detailed view of your job, where you can access the logs for each attempt.