Hadoop HDFS

HDFS stands for Hadoop Distributed File System. It is a highly available, distributed file system for storing very large amounts of data, which is organized in clusters of servers. The data is stored on several computers (nodes) within a cluster, which is done by dividing the files into data blocks of fixed length and distributing them redundantly across the nodes.

The HDFS architecture is composed of master and slave nodes. The master node, called the NameNode, processes all incoming requests and manages the file system metadata, while organizing the storage of file data on the slave nodes, called the DataNodes. The system is designed for large amounts of data and can handle the storage of several million files.

HDFS is optimized to store large files, from a few megabytes to several gigabytes and more. Files are split into chunks. Chunks are stored in various locations of the cluster, and each chunk is replicated multiple times. The chunk size and the replication factor are configurable; they default to 256 MB and 2, respectively, on the Adaltas Cloud Hadoop cluster. These values can have a major impact on Hadoop’s performance.
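To make the effect of these two settings concrete, here is a minimal sketch of the arithmetic. It assumes the 256 MB chunk size and replication factor of 2 quoted above; the 1000 MB file size and the function names are hypothetical, chosen only for illustration.

```shell
#!/usr/bin/env bash

# Number of chunks needed for a file: file size divided by chunk size, rounded up.
blocks_needed() {
  local file_mb=$1 block_mb=$2
  echo $(( (file_mb + block_mb - 1) / block_mb ))
}

# Raw storage used in the cluster: every byte is stored once per replica.
# The last chunk only occupies its actual size, so no rounding is applied here.
raw_storage_mb() {
  local file_mb=$1 replication=$2
  echo $(( file_mb * replication ))
}

file_mb=1000     # hypothetical file size, in MB
block_mb=256     # chunk size quoted above
replication=2    # replication factor quoted above

echo "blocks: $(blocks_needed "$file_mb" "$block_mb")"
echo "raw storage: $(raw_storage_mb "$file_mb" "$replication") MB"
```

With these values, the file is split into 4 chunks and occupies 2000 MB of raw storage across the cluster.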

Refer to this link to see the DataNode information on the Adaltas Cloud cluster: http://hdfs-nn-1.au.adaltas.cloud:50070/dfshealth.html#tab-datanode

The main characteristics of HDFS are:

- Fault-tolerant: Each file is split into blocks of a configurable size (typically 64 MB or 128 MB). These blocks are replicated across different machines according to a replication factor, ensuring that if a machine goes down, the data contained in its blocks can be served from another machine.

- Write Once Read Many principle: Data is transferred as a continuous stream when an HDFS client asks for it. HDFS does not wait to read the entire file; it sends the data as soon as it is read, which makes data processing efficient. On the other hand, files in HDFS are immutable: a file hosted in HDFS cannot be updated or modified in place.

- Scalable: Storage capacity grows by adding machines, and there is no limit on their number. Note also that HDFS is made for big files: a large number of small files consumes RAM on the master nodes and may degrade the performance of HDFS operations.

- Highly Available: HDFS is built with an automated mechanism to detect and recover from faults. Its distributed structure makes it a resilient product.
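The cost of small files mentioned in the list above can be estimated roughly. The sketch below assumes a commonly quoted rule of thumb of about 150 bytes of NameNode memory per file or block object; this figure is an assumption, not a value taken from this tutorial, and the real cost depends on the Hadoop version and configuration. The function name and the sample figures are hypothetical.

```shell
#!/usr/bin/env bash

# Rough NameNode memory estimate: one object per file plus one per block.
# The default of 150 bytes per object is an ASSUMED rule of thumb.
namenode_bytes() {
  local files=$1 blocks_per_file=$2 bytes_per_object=${3:-150}
  echo $(( files * (1 + blocks_per_file) * bytes_per_object ))
}

# About 1 GB stored as a single file of 8 blocks (128 MB each).
echo "one big file:     $(namenode_bytes 1 8) bytes"

# The same 1 GB stored as 8192 files of 128 KB, one block each.
echo "many small files: $(namenode_bytes 8192 1) bytes"
```

Under these assumptions, the same volume of data costs the master node 1350 bytes in the first case and 2457600 bytes in the second, which is why grouping small files into larger ones is usually preferable.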

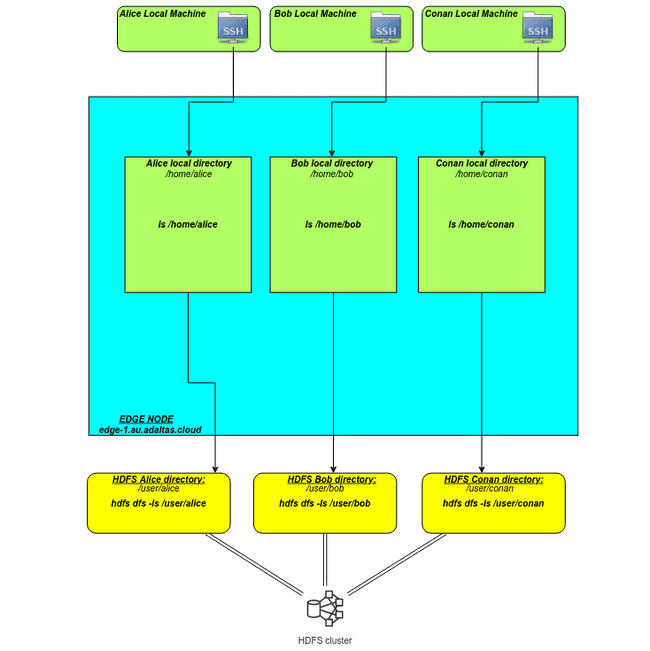

HDFS, your local machine and edge node

As presented earlier, HDFS is software that runs in a cluster environment, in which multiple machines are joined to act as a group. Since it resides inside a cluster, users must connect from a client. Your local machine can be configured as a client by downloading the Hadoop libraries and setting up the right configuration files. For convenience, we provide a preconfigured environment on a remote machine, which we call the edge node.

Note that:

- Nothing is stored on the local machine.

- You connect from your local machine to the “edge node” using SSH.

- When a file needs to be retrieved from the web and stored on HDFS:

  - Download the file to the “edge node”.

  - Write it to HDFS.

- If you download heavy files, always pipe them directly into HDFS and never attempt to write them temporarily on the edge node. If you are not familiar with Unix pipes, an example is shown below.
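For readers new to Unix pipes, the idea can be tried without a cluster. The sketch below is only an analogy: produce is a hypothetical stand-in for a download command, and store is a hypothetical stand-in for any command that reads its input from standard input. It compares writing a temporary file first with piping the data directly.

```shell
#!/usr/bin/env bash

# Hypothetical stand-in for a download: emits five short lines on stdout.
produce() { printf 'line %s\n' 1 2 3 4 5; }

# Hypothetical stand-in for a command reading stdin: reports the bytes received.
store() { wc -c | tr -d ' '; }

# Approach to avoid for heavy files: write a temporary file, then read it back.
tmp=$(mktemp)
produce > "$tmp"
via_file=$(store < "$tmp")
rm -f "$tmp"

# Preferred approach: the pipe streams the data, nothing is written to disk.
via_pipe=$(produce | store)

echo "via temporary file: $via_file bytes, via pipe: $via_pipe bytes"
```

Both approaches deliver the same 35 bytes, but only the first one needs disk space on the machine running the commands.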

The following graph shows the differences:

Tutorial objectives

To follow the steps below, you must be connected to the edge-1 node via SSH.

In this tutorial you will:

- Discover the HDFS basic commands

- Example 1 - create a file on the “edge node” and move it to HDFS

- Example 2 - pipe a downloaded book file with curl directly into HDFS

HDFS basic commands

The list of all the available options can be retrieved by typing hdfs dfs -help on the edge node.

You can also refer to this documentation.

The following commands are used very often:

- hdfs dfs -mkdir <XxX> - create a directory in HDFS, in the user's HDFS home folder

- hdfs dfs -put <src> <dst> - upload object(s) to HDFS

- hdfs dfs -cp <src> <dst> - copy a file

- hdfs dfs -mv <src> <dst> - move a file (also used to rename a file)

- hdfs dfs -rm <object> - delete a file (use -r to delete a folder)
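The commands listed above can be chained into a short session. The sketch below is a dry run under stated assumptions: the hdfs_dfs wrapper, the DRY_RUN variable, the demo directory, and the notes.txt file are all hypothetical names introduced for illustration. By default the wrapper only prints each command; setting DRY_RUN=0 on the edge node would execute them, provided a local notes.txt file exists.

```shell
#!/usr/bin/env bash

# Hypothetical wrapper: print the command by default, run it when DRY_RUN=0.
DRY_RUN=${DRY_RUN:-1}
hdfs_dfs() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "hdfs dfs $*"
  else
    hdfs dfs "$@"
  fi
}

hdfs_dfs -mkdir demo                          # create a directory
hdfs_dfs -put notes.txt demo                  # upload a local file into it
hdfs_dfs -cp demo/notes.txt demo/copy.txt     # copy the file inside HDFS
hdfs_dfs -mv demo/copy.txt demo/renamed.txt   # rename the copy
hdfs_dfs -rm demo/renamed.txt                 # delete a single file
hdfs_dfs -rm -r demo                          # delete the directory and its content
```

Printing the commands first is a cheap way to review a sequence that ends with a recursive delete before running it for real.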

Example 1

In this example we will create a file on the “edge node” and move it to HDFS.

Let’s create a drivers.csv file. To do that, copy and run the following block on the “edge node”:

cat <<EOF > drivers.csv
10,George Vetticaden,621011971,244-4532 Nulla Rd.,N,miles
11,Jamie Engesser,262112338,366-4125 Ac Street,N,miles
12,Paul Coddin,198041975,Ap #622-957 Risus. Street,Y,hours
13,Joe Niemiec,139907145,2071 Hendrerit. Ave,Y,hours
14,Adis Cesir,820812209,Ap #810-1228 In St.,Y,hours
15,Rohit Bakshi,239005227,648-5681 Dui- Rd.,Y,hours
EOF

This file is stored in your user folder on the “edge node”. You can print it with the command:

cat drivers.csv

Now, let’s create an HDFS subfolder. Run the following commands sequentially:

# Creates a directory named "drivers_data"

hdfs dfs -mkdir drivers_data

# Upload the file into the subfolder

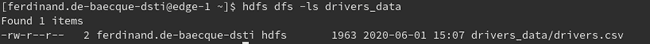

hdfs dfs -put drivers.csv drivers_dataYou now can list the files in the created directory by the command:

hdfs dfs -ls drivers_dataIt will output something similar as this:

The file is now stored in HDFS, we don’t need to keep the original one in our local folder on the “edge node”. To delete it run:

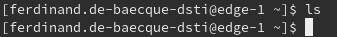

rm ./drivers_csvSo, you can check that it was deleted by listing the files:

Example 2

In this example we will pipe a downloaded book file with curl directly into HDFS.

# TODO: find the right link and the right file name.

curl -sS https://www.google.com/robots.txt | hdfs dfs -put - /robots.txt